Text Emotion Classification with BERT

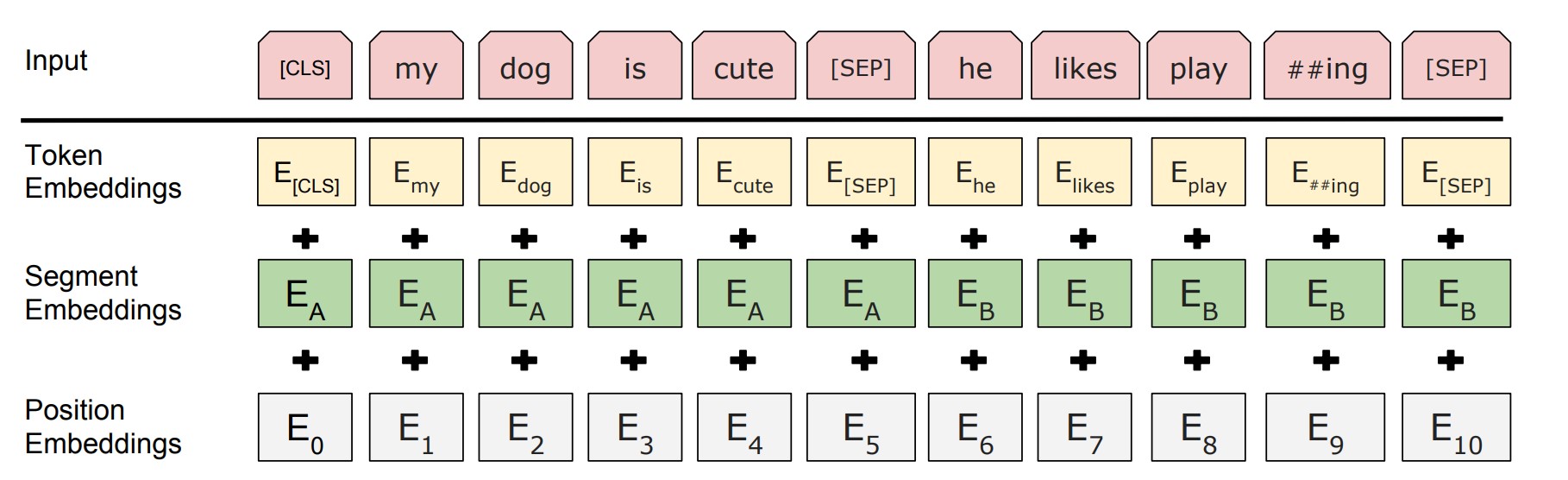

In our multimodal emotion detection project, text emotion classification plays a central role. For this task we use BERT (Bidirectional Encoder Representations from Transformers), a pretrained natural language processing (NLP) model that performs well across a wide range of language tasks, to classify the emotions expressed in text.

Introduction to the BERT Model

BERT is built on the Transformer architecture. Its defining feature is bidirectional encoding: it considers the context to the left and to the right of each word simultaneously. In our project, we fine-tuned BERT for five epochs on a V100 GPU to perform emotion classification on textual data.
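The fine-tuning step described above might look roughly like the following sketch, which uses the Hugging Face `transformers` library with PyTorch. Only the five epochs come from the text; the checkpoint name, batch size, learning rate, maximum sequence length, and the names `FineTuneConfig`, `build_label_map`, and `fine_tune` are assumptions made for illustration, not the project's actual training code.

```python
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    model_name: str = "bert-base-uncased"  # assumed checkpoint
    num_epochs: int = 5                    # stated in the text
    batch_size: int = 32                   # assumed
    learning_rate: float = 2e-5            # assumed
    max_length: int = 128                  # assumed


def build_label_map(labels):
    """Map each distinct emotion label to an integer class id."""
    return {label: i for i, label in enumerate(sorted(set(labels)))}


def fine_tune(texts, labels, config=None):
    """Fine-tune a BERT sequence classifier on (text, emotion label) pairs."""
    # Heavy third-party imports are kept local so the helpers above
    # can be used without torch/transformers installed.
    import torch
    from torch.utils.data import DataLoader, TensorDataset
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    config = config or FineTuneConfig()
    label_map = build_label_map(labels)
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    tokenizer = AutoTokenizer.from_pretrained(config.model_name)
    model = AutoModelForSequenceClassification.from_pretrained(
        config.model_name, num_labels=len(label_map)
    ).to(device)

    # Tokenize all texts at once, padding to the longest sequence.
    encoded = tokenizer(
        list(texts),
        padding=True,
        truncation=True,
        max_length=config.max_length,
        return_tensors="pt",
    )
    targets = torch.tensor([label_map[label] for label in labels])
    dataset = TensorDataset(encoded["input_ids"], encoded["attention_mask"], targets)
    loader = DataLoader(dataset, batch_size=config.batch_size, shuffle=True)

    optimizer = torch.optim.AdamW(model.parameters(), lr=config.learning_rate)
    model.train()
    for _ in range(config.num_epochs):
        for input_ids, attention_mask, y in loader:
            optimizer.zero_grad()
            # Passing labels makes the model return the cross-entropy loss.
            output = model(
                input_ids=input_ids.to(device),
                attention_mask=attention_mask.to(device),
                labels=y.to(device),
            )
            output.loss.backward()
            optimizer.step()
    return model, tokenizer, label_map
```

Calling `fine_tune(train_texts, train_labels)` would return the trained model together with the tokenizer and label map needed to decode predictions later.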

Experimental Results

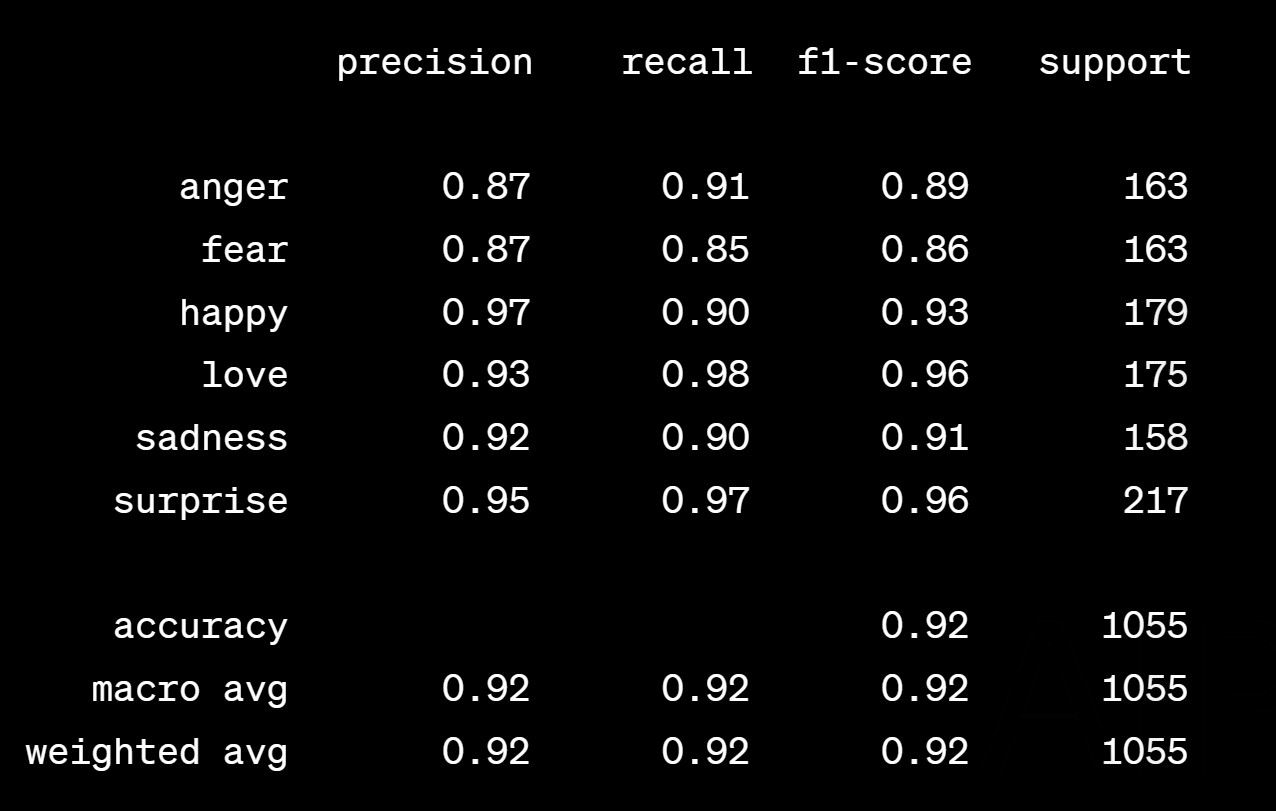

On our test data, we achieved the following encouraging results:

Precision, recall, and F1-score classification report:

Testing accuracy: 0.9213
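For reference, per-class precision, recall, and F1-score, along with overall accuracy, can be computed from true and predicted labels as in the sketch below. The function name `emotion_report` and the example labels are illustrative assumptions rather than the project's evaluation code; scikit-learn's `classification_report` produces the same kind of summary in one call.

```python
from collections import Counter


def emotion_report(y_true, y_pred):
    """Return (per_class_metrics, accuracy) for two equal-length label lists."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this class, but it was wrong
            fn[truth] += 1  # missed an example of the true class

    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "support": actual,
        }

    accuracy = sum(tp.values()) / len(y_true) if y_true else 0.0
    return report, accuracy


# Toy example with made-up labels.
report, accuracy = emotion_report(
    ["joy", "joy", "sadness", "anger"],
    ["joy", "sadness", "sadness", "anger"],
)
```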

Analysis

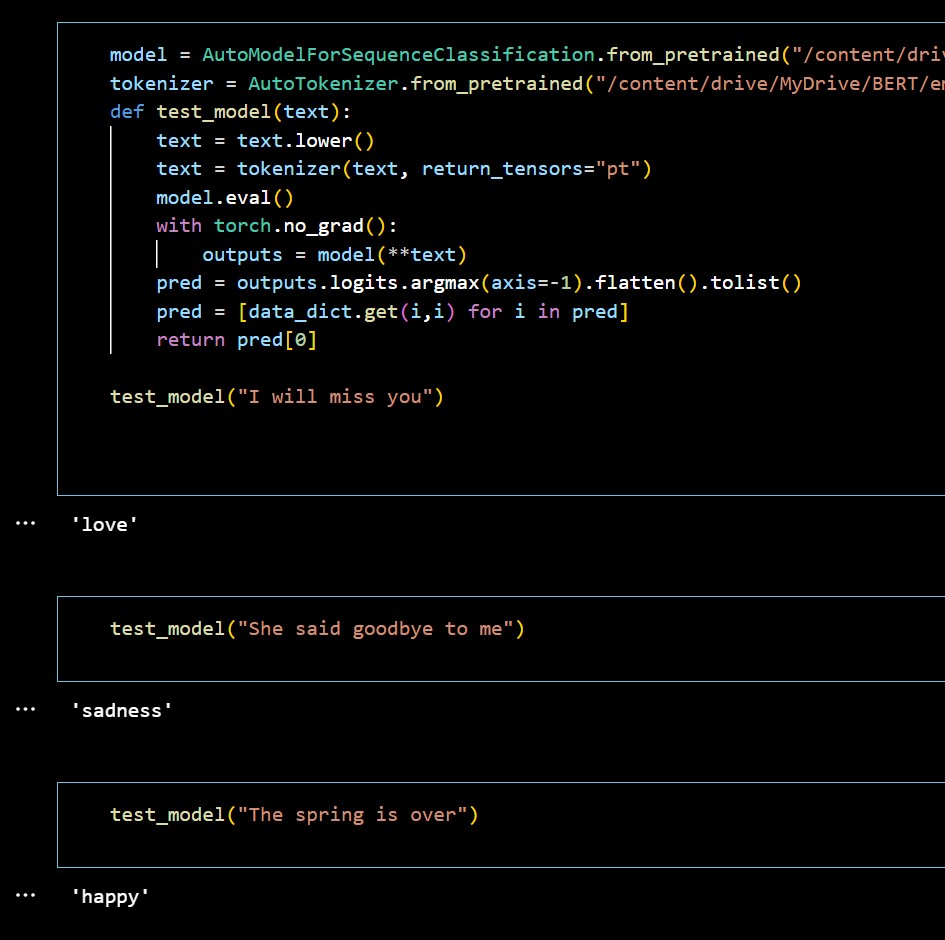

These results show that BERT performs well on text emotion classification. Its effectiveness is most evident on sentences with strong emotional content. However, emotion classification is not without its challenges.

For instance, certain sentences, such as "The spring is over," cannot be classified accurately without additional context or knowledge of the individual. Depending on a person's preference for seasons, this sentence might evoke either happiness or sadness. This underscores the impact of context and individual factors on text-based emotion classification.

To better understand and capture emotions, we plan to expand our multimodal emotion detection project to include audio tone and facial expression data. By combining text, audio tone, and facial expression information, we aim to improve emotion recognition accuracy. If this succeeds, we will proceed to the integration phase and combine the multimodal data. If challenges arise, we will explore methods such as hybrid learning to improve the accuracy and comprehensiveness of emotion analysis.

This project represents our commitment to the fields of NLP and emotion analysis. We will continue to work diligently to improve our methods and technologies for a better understanding and recognition of emotions.

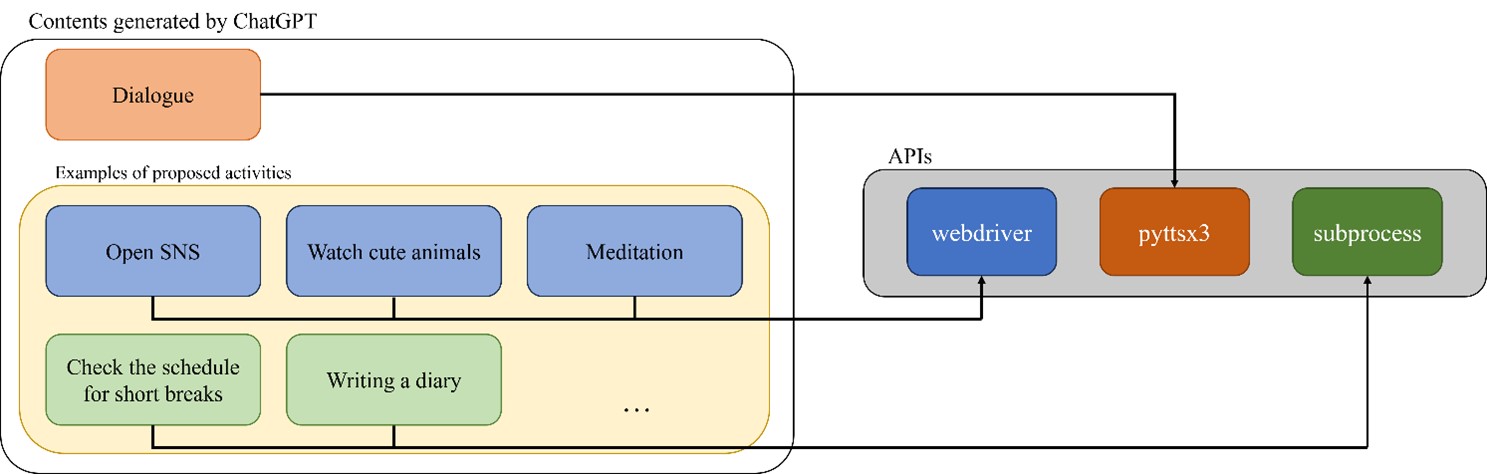

API organization based on the proposed activity

After receiving the user's emotional analysis result, ChatGPT generates an activity proposal and an appropriate dialogue according to the user's emotional state.

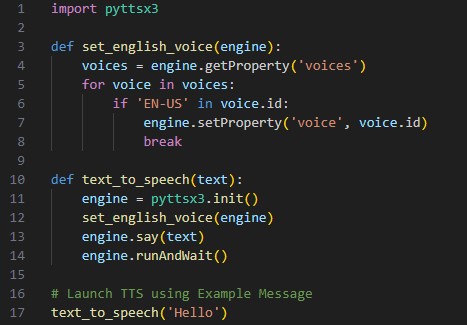

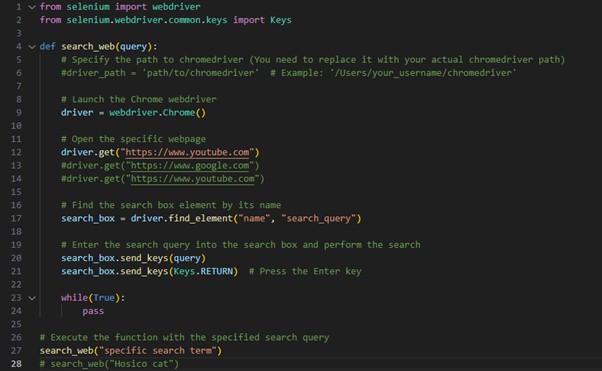

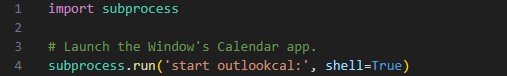

ChatGPT's direct conversation with the user is converted to speech through the pyttsx3 API. The activity proposal is executed through the webdriver API if it can be carried out in an Internet browser, and through the subprocess API if it is an application that can be run directly on Windows.

By wrapping these APIs as functions and teaching ChatGPT how to use them through prompt engineering, we enable ChatGPT to generate Python code that outputs appropriate dialogue as TTS and proposes activities according to the user's emotional state.
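One way to set up such a prompt is sketched below. It builds a chat-style message list that describes the available helper functions and reports the detected emotion. The helper names `speak`, `open_in_browser`, and `launch_app`, the wording of the prompt, and the function `build_messages` are all hypothetical; the call that sends the messages to ChatGPT is omitted.

```python
# System prompt describing the (hypothetical) helper functions ChatGPT may call.
API_GUIDE = """You control a desktop assistant by writing Python code.
Only these helper functions are available:
- speak(text): read `text` aloud to the user (text-to-speech).
- open_in_browser(url): open `url` in a web browser.
- launch_app(command): start a locally installed application.
Reply with Python code only. Call speak() once, then at most one other helper."""


def build_messages(emotion, confidence=None):
    """Build the chat messages for one detected emotion."""
    detail = f"Detected emotion: {emotion}"
    if confidence is not None:
        detail += f" (confidence {confidence:.2f})"
    return [
        {"role": "system", "content": API_GUIDE},
        {"role": "user", "content": detail + ". Suggest one suitable activity."},
    ]


messages = build_messages("sadness", 0.87)
```

Keeping the list of permitted helpers in the system message restricts the generated code to a small set of functions, which makes it easier to review before it is executed.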

Below is an example of the use of pyttsx3, webdriver, and subprocess:
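As a minimal sketch of how the three APIs might fit together (not the project's actual code), the snippet below wraps each one in a small helper. The names `speak`, `open_in_browser`, `launch_app`, `plan_activity`, and `run_activity` are hypothetical, and the browser helper assumes Selenium 4 with a Chrome driver available on the machine.

```python
import subprocess


def speak(text):
    """Read text aloud with pyttsx3 (third-party, offline TTS)."""
    import pyttsx3

    engine = pyttsx3.init()
    engine.say(text)
    engine.runAndWait()  # blocks until speech has finished


def open_in_browser(url):
    """Open a URL in Chrome through Selenium WebDriver (third-party)."""
    from selenium import webdriver

    driver = webdriver.Chrome()
    driver.get(url)
    return driver  # caller should call driver.quit() when done


def launch_app(command):
    """Start a local application without waiting for it to exit."""
    return subprocess.Popen(command)


def plan_activity(target):
    """Decide how to execute a proposal: in the browser or as a local app."""
    if target.startswith(("http://", "https://")):
        return ("browser", target)
    return ("app", [target])


def run_activity(message, target):
    """Speak the dialogue, then carry out the proposed activity."""
    speak(message)
    kind, payload = plan_activity(target)
    if kind == "browser":
        return open_in_browser(payload)
    return launch_app(payload)
```

For example, `run_activity("How about some music?", "https://www.youtube.com")` would speak the message and then open the page in a browser, while a target such as `"notepad.exe"` would be started as a local Windows application.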

After this part is implemented, we will teach ChatGPT to generate a GUI with the tkinter API so that users can more easily choose among the activities ChatGPT proposes.